This is Naked Capitalism fundraising week. 735 donors have already invested in our efforts to combat corruption and predatory conduct, particularly in the financial realm. Please join us and participate via our donation page, which shows how to give via check, credit card, debit card, PayPal, Clover, or Wise. Read about why we’re doing this fundraiser, what we’ve accomplished in the last year, and our current goal, supporting our expanded daily Links.

Yves here. This is a devastating, must-read paper by Servaas Storm on how AI is failing to meet core, repeatedly hyped performance promises, and never can, no matter how much money and computing power is thrown at it. Yet AI, which Storm calls “Artificial Information,” is still garnering worse-than-dot-com-frenzy valuations even as errors are, if anything, increasing.

By Servaas Storm, Senior Lecturer of Economics, Delft University of Technology. Originally published at the Institute for New Economic Thinking website

This paper argues that (i) we have reached “peak GenAI” in terms of current Large Language Models (LLMs): scaling (building more data centers and using more chips) will not take us further to the goal of “Artificial General Intelligence” (AGI), and returns are diminishing rapidly; and (ii) the AI-LLM industry and the larger U.S. economy are experiencing a speculative bubble, which is about to burst.

The U.S. is undergoing an extraordinary AI-fueled economic boom: the stock market is soaring thanks to exceptionally high valuations of AI-related tech firms, which are fueling economic growth through the hundreds of billions of U.S. dollars they are spending on data centers and other AI infrastructure. The AI investment boom is based on the belief that AI will make workers and firms significantly more productive, which will in turn boost corporate profits to unprecedented levels. But the summer of 2025 did not bring good news for enthusiasts of generative Artificial Intelligence (GenAI), who had all bought into the inflated promise of the likes of OpenAI’s Sam Altman that “Artificial General Intelligence” (AGI), the holy grail of current AI research, would be right around the corner.

Let us consider the hype more closely. Already in January 2025, Altman wrote that “we are now confident we know how to build AGI”. Altman’s optimism echoed claims by OpenAI’s partner and major financial backer Microsoft, which had put out a paper in 2023 claiming that the GPT-4 model already exhibited “sparks of AGI.” Elon Musk (in 2024) was similarly confident that the Grok model developed by his company xAI would reach AGI, an intelligence “smarter than the smartest human being”, probably by 2025 or at least by 2026. Meta CEO Mark Zuckerberg said that his company was committed to “building full general intelligence”, and that super-intelligence is now “in sight”. Likewise, Dario Amodei, co-founder and CEO of Anthropic, said “powerful AI”, i.e., smarter than a Nobel Prize winner in any field, could come as early as 2026 and usher in a new age of health and abundance: the U.S. would become a “country of geniuses in a datacenter”, if … AI didn’t wind up killing us all.

For Mr. Musk and his GenAI fellow travelers, the biggest hurdle on the road to AGI is the lack of computing power (installed in data centers) to train AI bots, which, in turn, is due to a lack of sufficiently advanced computer chips. The demand for more data and more data-crunching capabilities will require about $3 trillion in capital just by 2028, in the estimation of Morgan Stanley. That would exceed the capacity of the global credit and derivative securities markets. Spurred by the imperative to win the AI race with China, the GenAI propagandists firmly believe that the U.S. can be put on the yellow brick road to the Emerald City of AGI by building more data centers faster (an unmistakably “accelerationist” expression).

Notably, AGI is an ill-defined notion, and perhaps more of a marketing concept used by AI promoters to persuade their financiers to invest in their endeavors. Roughly, the idea is that an AGI model can generalize beyond specific examples found in its training data, similar to how some human beings can do almost any kind of work after having been shown a few examples of how to do a task, by learning from experience and changing methods when needed. AGI bots will be capable of outsmarting human beings, creating new scientific ideas, and doing innovative as well as routine coding. AI bots will be telling us how to develop new medicines to cure cancer, fix global warming, drive our cars and grow our genetically modified crops. Hence, in a radical bout of creative destruction, AGI would transform not just the economy and the workplace, but also systems of health care, energy, agriculture, communications, entertainment, transportation, R&D, innovation and science.

OpenAI’s Altman boasted that AGI can “discover new science,” because “I think we’ve cracked reasoning in the models,” adding that “we have a long way to go.” He “think[s] we know what to do,” saying that OpenAI’s o3 model “is already pretty smart,” and that he’s heard people say “wow, this is like a good PhD.” Announcing the launch of ChatGPT-5 in August, Mr. Altman posted on the internet that “We think you’ll love using GPT-5 much more than any previous AI. It is useful, it is smart, it is fast [and] intuitive. With GPT-5 now, it’s like talking to an expert, a legitimate PhD-level expert in anything, any area you need, on demand; they can help you with whatever your goals are.”

But then things began to fall apart, and rather quickly so.

ChatGPT-5 Is a Letdown

The first piece of bad news is that the much-hyped ChatGPT-5 turned out to be a dud: incremental improvements wrapped in a routing architecture, nowhere near the breakthrough to AGI that Sam Altman had promised. Users are underwhelmed. As the MIT Technology Review reports: “The much-hyped release makes several enhancements to the ChatGPT user experience. But it’s still far short of AGI.” Worryingly, OpenAI’s internal tests show that GPT-5 ‘hallucinates’ in roughly one in 10 responses on certain factual tasks when connected to the internet. Without web-browsing access, however, GPT-5 is wrong in almost 1 in 2 responses, which should be troubling. Even more worrisome, ‘hallucinations’ may also reflect biases buried within datasets. For instance, an LLM might ‘hallucinate’ crime statistics that align with racial or political biases simply because it has learned from biased data.

Of note here is that AI chatbots can be and are actively used to spread misinformation (see here and here). According to recent research, chatbots spread false claims 35% of the time when prompted with questions about controversial news topics, almost double the 18% rate of a year ago (here). AI curates, orders, presents, and censors information, influencing interpretation and debate, pushing dominant (mainstream or preferred) viewpoints while suppressing alternatives, quietly removing inconvenient facts or making up convenient ones. The key issue is: Who controls the algorithms? Who sets the rules for the tech bros? It is evident that by making it easy to spread “realistic-looking” misinformation and biases and/or suppress critical evidence or argumentation, GenAI does and will have non-negligible societal costs and risks, which have to be counted when assessing its impacts.

Building Larger LLMs Is Leading Nowhere

The ChatGPT-5 episode raises serious doubts and existential questions about whether the GenAI industry’s core strategy of building ever-larger models on ever-larger data distributions has already hit a wall. Critics, including cognitive scientist Gary Marcus (here and here), have long argued that simply scaling up LLMs will not lead to AGI, and GPT-5’s sorry stumbles do validate those concerns. It is becoming more widely understood that LLMs are not built on proper and robust world models, but instead are built to autocomplete, based on sophisticated pattern-matching. This is why, for example, they still cannot even play chess reliably and continue to make mind-boggling errors with startling regularity.

My new INET Working Paper discusses three sobering research studies showing that novel ever-larger GenAI models do not become better, but worse, and do not reason, but rather parrot reasoning-like text. To illustrate, a recent paper by scientists at MIT and Harvard shows that even when trained on all of physics, LLMs fail to uncover even the existing generalized and universal physical principles underlying their training data. Specifically, Vafa et al. (2025) note that LLMs follow a “Kepler-esque” approach: they can successfully predict the next position in a planet’s orbit, but fail to find the underlying explanation of Newton’s Law of Gravity (see here). Instead, they resort to fitting made-up rules that allow them to successfully predict the planet’s next orbital position, but these models fail to find the force vector at the heart of Newton’s insight. The MIT-Harvard paper is explained in this video. LLMs cannot and do not infer physical laws from their training data. Remarkably, they cannot even identify the relevant information from the internet. Instead, they make it up.

Worse, AI bots are incentivized to guess (and give an incorrect response) rather than admit they do not know something. This problem is recognized by researchers from OpenAI in a recent paper. Guessing is rewarded because, who knows, it might be right. The error is at present uncorrectable. Accordingly, it might well be prudent to think of “Artificial Information” rather than “Artificial Intelligence” when using the acronym AI. The bottom line is simple: this is very bad news for anyone hoping that further scaling (building ever larger LLMs) would lead to better outcomes (see also Che 2025).
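The incentive to guess can be made concrete with a little expected-value arithmetic. The sketch below assumes a simple, hypothetical scoring rule of our own choosing (1 point for a correct answer, 0 for “I don’t know”, and an optional penalty for a wrong answer); the function names and numbers are illustrative and are not taken from the OpenAI paper. With no penalty for wrong answers, guessing never scores worse than abstaining, whatever the model’s confidence.

```python
def expected_score(p_correct: float, wrong_penalty: float = 0.0) -> float:
    """Expected score of answering under a hypothetical grading rule.

    Correct answer: +1. Wrong answer: -wrong_penalty. Abstaining: 0.
    """
    if not 0.0 <= p_correct <= 1.0:
        raise ValueError("p_correct must be a probability")
    return p_correct * 1.0 - (1.0 - p_correct) * wrong_penalty


def should_guess(p_correct: float, wrong_penalty: float = 0.0) -> bool:
    """Guess whenever answering scores at least as well as abstaining (score 0)."""
    return expected_score(p_correct, wrong_penalty) >= 0.0


# Binary grading (no penalty): guessing never loses, even at 10% confidence.
print(should_guess(0.10))
# If a wrong answer costs 1 point, a 10%-confident guess loses on average.
print(should_guess(0.10, wrong_penalty=1.0))
print(should_guess(0.60, wrong_penalty=1.0))
```

Under this assumed rule, a penalty c for wrong answers makes guessing worthwhile only when the model’s confidence is at least c/(1 + c); with c = 1, that means at least 50%.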

95% of Generative AI Pilot Projects in Companies Are Failing

Corporations had rushed to announce AI investments or claim AI capabilities for their products in the hope of turbocharging their share prices. Then came the news that the AI tools are not doing what they are supposed to do and that people are realizing it (see Ed Zitron). An August 2025 report titled The GenAI Divide: State of AI in Business 2025, published by MIT’s NANDA initiative, concludes that 95% of generative AI pilot projects in companies are failing to raise revenue growth. As reported by Fortune, “generic tools like ChatGPT […] stall in enterprise use since they don’t learn from or adapt to workflows”. Quite.

Indeed, firms are backpedaling after cutting hundreds of jobs and replacing those workers with AI. For instance, Swedish “Buy Burritos Now, Pay Later” Klarna bragged in March 2024 that its AI assistant was doing the work of 700 (laid-off) workers, only to rehire them (sadly, as gig workers) in the summer of 2025 (see here). Other examples include IBM, forced to rehire staff after laying off about 8,000 workers to implement automation (here). Recent U.S. Census Bureau data by firm size show that AI adoption has been declining among companies with more than 250 employees.

MIT economist Daron Acemoglu (2025) predicts rather modest productivity impacts of AI in the next 10 years and warns that some applications of AI may have negative social value. “We’re still going to have journalists, we’re still going to have financial analysts, we’re still going to have HR employees,” Acemoglu says. “It’s going to impact a bunch of office jobs that are about data summary, visual matching, pattern recognition, etc. And those are essentially about 5% of the economy.” Similarly, using two large-scale AI adoption surveys (late 2023 and 2024) covering 11 exposed occupations (25,000 workers in 7,000 workplaces) in Denmark, Anders Humlum and Emilie Vestergaard (2025) show, in a recent NBER Working Paper, that the economic impacts of GenAI adoption are minimal: “AI chatbots have had no significant impact on earnings or recorded hours in any occupation, with confidence intervals ruling out effects larger than 1%. Modest productivity gains (average time savings of 3%), combined with weak wage pass-through, help explain these limited labor market effects.” These findings provide a much-needed reality check for the hyperbole that GenAI is coming for all of our jobs. Reality is not even close.

GenAI will not even make the tech workers who do the coding redundant, contrary to the predictions of AI enthusiasts. OpenAI researchers found (in early 2025) that advanced AI models (including GPT-4o and Anthropic’s Claude 3.5 Sonnet) are still no match for human coders. The AI bots failed to grasp how widespread bugs are or to understand their context, leading to solutions that are incorrect or insufficiently comprehensive. Another new study from the nonprofit Model Evaluation and Threat Research (METR) finds that in practice, programmers using early-2025 AI tools are actually slower with AI assistance, spending 19 percent more time when using GenAI than when coding by themselves (see here). Programmers spent their time reviewing AI outputs, prompting AI systems, and correcting AI-generated code.

The U.S. Economy at Large Is Hallucinating

The disappointing rollout of ChatGPT-5 raises doubts about OpenAI’s ability to build and market consumer products that users are willing to pay for. But the point I want to make is not just about OpenAI: the American AI industry as a whole has been built on the premise that AGI is just around the corner. All that is needed is sufficient “compute”, i.e., millions of Nvidia AI GPUs, enough data centers and sufficient cheap electricity to do the massive statistical pattern mapping needed to generate (a semblance of) “intelligence”. This, in turn, means that “scaling” (investing billions of U.S. dollars in chips and data centers) is the one-and-only way forward, and this is exactly what the tech firms, Silicon Valley venture capitalists and Wall Street financiers are good at: mobilizing and spending funds, this time for “scaling up” generative AI and building data centers to support all the expected future demand for AI use.

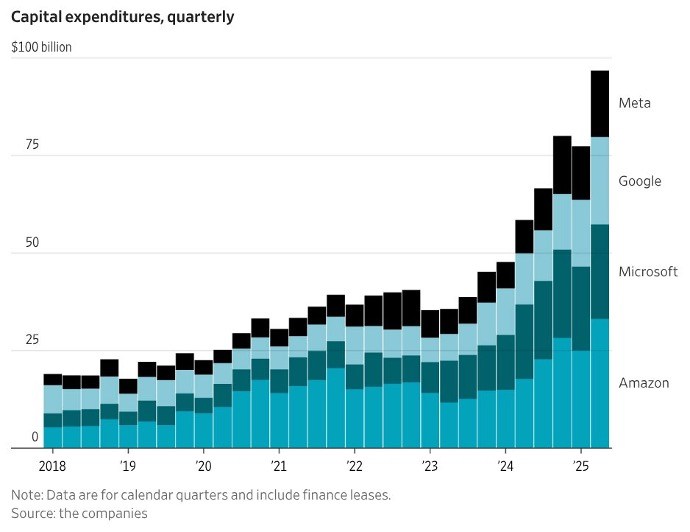

During 2024 and 2025, Big Tech firms invested a staggering $750 billion in data centers in cumulative terms, and they plan to roll out a cumulative investment of $3 trillion in data centers during 2026-2029 (Thornhill 2025). The so-called “Magnificent 7” (Alphabet, Apple, Amazon, Meta, Microsoft, Nvidia, and Tesla) spent more than $100 billion on data centers in the second quarter of 2025; Figure 1 gives the capital expenditures for four of the seven companies.

FIGURE 1

Source: Christopher Mims (2025), https://x.com/mims/status/1951…

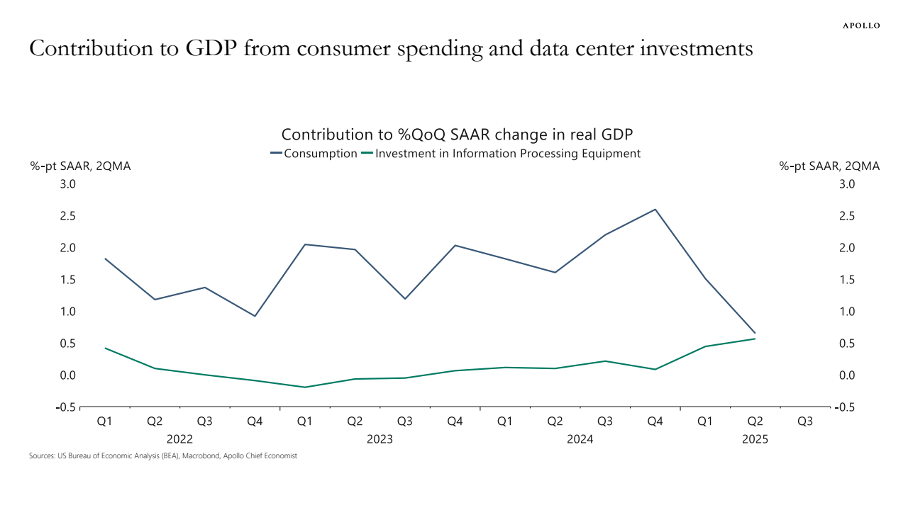

The surge in corporate investment in “information processing equipment” is huge. According to Torsten Sløk, chief economist at Apollo Global Management, data center investments’ contribution to (sluggish) real U.S. GDP growth has been the same as that of consumer spending over the first half of 2025 (Figure 2). Financial investor Paul Kedrosky finds that capital expenditures on AI data centers (in 2025) have passed the peak of telecom spending during the dot-com bubble (of 1995-2000).

FIGURE 2

Supply: Torsten Sløk (2025). https://www.apolloacademy.com/…

Riding the AI hype and hyperbole, tech stocks have gone through the roof. The S&P 500 Index rose by circa 58% during 2023-2024, driven mostly by the growth of the share prices of the Magnificent Seven. The weighted-average share price of these seven companies increased by 156% during 2023-2024, while the other 493 firms experienced an average increase in their share prices of just 25%. America’s stock market is largely AI-driven.
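A back-of-the-envelope check shows what these three figures imply when taken together. The sketch treats the S&P 500 as a two-bucket, buy-and-hold portfolio: the Magnificent Seven at a fixed starting weight w and the other 493 firms at 1 - w, so that w·156% + (1 - w)·25% = 58%. Treating the 25% “average” as a cap-weighted return and ignoring weight drift and dividends are simplifying assumptions of this sketch, not claims from the source; the helper name is hypothetical.

```python
def implied_weight(index_ret: float, group_ret: float, rest_ret: float) -> float:
    """Starting weight w such that w*group_ret + (1 - w)*rest_ret == index_ret.

    Assumes a simple two-bucket, buy-and-hold index with no rebalancing.
    """
    return (index_ret - rest_ret) / (group_ret - rest_ret)


# Figures quoted in the text for 2023-2024, as fractions.
sp500, mag7, other493 = 0.58, 1.56, 0.25

w = implied_weight(sp500, mag7, other493)
# Fraction of the index's total gain contributed by the Magnificent 7 bucket.
share_of_gain = w * mag7 / sp500

print(f"implied Magnificent 7 starting weight: {w:.1%}")
print(f"share of the S&P 500 gain from the Magnificent 7: {share_of_gain:.1%}")
```

Under these assumptions, a group starting at roughly a quarter of the index would account for roughly two-thirds of its gain over the period.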

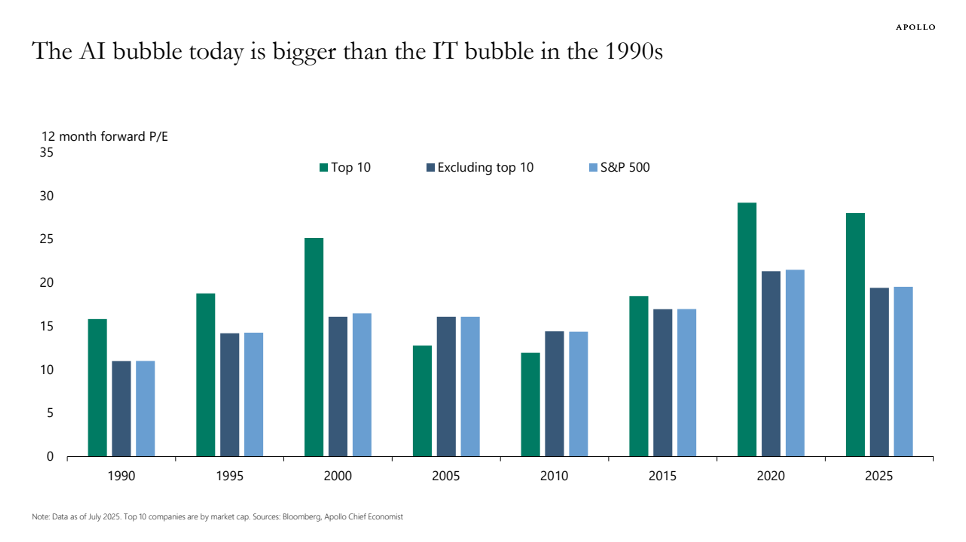

Nvidia’s shares rose by more than 280% over the past two years amid the exploding demand for its GPUs from the AI firms; as one of the most high-profile beneficiaries of the insatiable demand for GenAI, Nvidia now has a market capitalization of more than $4 trillion, the highest valuation ever recorded for a publicly traded company. Does this valuation make sense? Nvidia’s price-earnings (P/E) ratio peaked at 234 in July 2023 and has since declined to 47.6 in September 2025, which is still historically very high (see Figure 3). Nvidia is selling its GPUs to neocloud companies (such as CoreWeave, Lambda, and Nebius), which are funded by credit from Goldman Sachs, JPMorgan, Blackstone and other Wall Street financial firms, collateralized by the data centers filled with GPUs. In key cases, as explained by Ed Zitron, Nvidia offered to buy billions of U.S. dollars’ worth of unsold cloud compute from the loss-making neocloud companies, effectively backstopping its clients, all in the expectation of an AI revolution that has yet to arrive.

Likewise, the share price of Oracle Corp. (which is not included in the “Magnificent 7”) rose by more than 130% between mid-May and early September 2025 following the announcement of its $300 billion cloud-computing infrastructure deal with OpenAI. Oracle’s P/E ratio shot up to almost 68, which means that financial investors are willing to pay almost $68 for $1 of Oracle’s future earnings. One obvious problem with this deal is that OpenAI does not have $300 billion; the company made a loss of $15 billion during 2023-2025 and is projected to make a further cumulative loss of $28 billion during 2026-2028 (see below). It is unclear where OpenAI will get the money from. Ominously, Oracle has to build the infrastructure for OpenAI before it can collect any revenue. If OpenAI cannot pay for the massive computing capacity it agreed to buy from Oracle, which seems likely, Oracle will be left with expensive AI infrastructure for which it may not be able to find other customers, especially once the AI bubble fizzles out.
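The P/E arithmetic behind these statements is simple: the ratio is share price divided by annual earnings per share (trailing or forecast, depending on the source), so its inverse, the earnings yield, is the annual profit bought per dollar of share price. The sketch applies that definition to the P/E figures quoted in the text. The “payback at flat earnings” column assumes, purely for illustration, that earnings never grow, which is not how investors actually value growth stocks; the helper name is hypothetical.

```python
def earnings_yield(pe_ratio: float) -> float:
    """Earnings per dollar of share price, i.e. the inverse of the P/E ratio."""
    if pe_ratio <= 0:
        raise ValueError("P/E must be positive for this calculation")
    return 1.0 / pe_ratio


# P/E ratios quoted in the text.
quotes = {
    "Oracle (2025)": 68.0,
    "Nvidia (peak, Jul 2023)": 234.0,
    "Nvidia (Sep 2025)": 47.6,
}

for name, pe in quotes.items():
    # With flat earnings (a simplifying assumption), the number of years
    # needed to earn back the share price equals the P/E ratio itself.
    print(f"{name:24s} P/E {pe:6.1f}  earnings yield {earnings_yield(pe):.2%}  "
          f"payback at flat earnings {pe:.0f} years")
```

At a P/E of about 68, each dollar invested buys roughly 1.5 cents of annual earnings.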

Tech stocks thus are significantly overvalued. Torsten Sløk, chief economist at Apollo Global Management, warned (in July 2025) that AI stocks are even more overvalued than dot-com stocks were in 1999. In a blogpost, he illustrates how the P/E ratios of Nvidia, Microsoft and eight other tech companies are higher than during the dot-com era (see Figure 3). We all remember how the dot-com bubble ended, and hence Sløk is right in sounding the alarm over the apparent market mania, driven by the “Magnificent 7” that are all heavily invested in the AI industry.

Big Tech does not buy these data centers and operate them itself; instead, the data centers are built by construction companies and then purchased by data center operators who lease them to (say) OpenAI, Meta or Amazon (see here). Wall Street private equity firms such as Blackstone and KKR are investing billions of dollars to buy up these data center operators, using commercial mortgage-backed securities as a source of funding. Data center real estate is a new, hyped-up asset class that is beginning to dominate financial portfolios. Blackstone calls data centers one of its “highest conviction investments.” Wall Street loves the lease contracts of data centers, which offer long-term, stable, predictable income, paid by AAA-rated clients like AWS, Microsoft and Google. Some Cassandras are warning of a potential oversupply of data centers, but given that “the future will be based on GenAI”, what could possibly go wrong?

FIGURE 3

Supply: Torsten Sløk (2025), https://www.apolloacademy.com/…

In a rare moment of candor, OpenAI CEO Sam Altman had it right. “Are we in a phase where investors as a whole are overexcited about AI?” Altman said during a dinner interview with reporters in San Francisco in August. “My opinion is yes.” He also compared today’s AI investment frenzy to the dot-com bubble of the late 1990s. “Someone’s gonna get burned there, I think,” Altman said. “Someone is going to lose a phenomenal amount of money – we don’t know who …”, but (going by what happened in previous bubbles) it will most likely not be Altman himself.

How long investors will continue to prop up the sky-high valuations of the key firms in the GenAI race therefore remains to be seen. Earnings of the AI industry continue to pale in comparison to the tens of billions of U.S. dollars that are spent on data center construction. According to an upbeat S&P Global research note published in June 2025, the GenAI market is projected to generate $85 billion in revenue by 2029. However, Alphabet (Google), Amazon, Meta and Microsoft together will spend nearly $400 billion on capital expenditures in 2025 alone. At the same time, the AI industry has a combined revenue that is little more than the revenue of the smartwatch industry (Zitron 2025).

So, what if GenAI simply is not profitable? This question is pertinent in view of the rapidly diminishing returns to the stratospheric capital expenditures on GenAI and data centers and the disappointing user experience of 95% of firms that adopted AI. One of the largest hedge funds in the world, Florida-based Elliott, told clients that AI is overhyped and Nvidia is in a bubble, adding that many AI products are “never going to be cost-efficient, never going to actually work right, will take up too much energy, or will prove to be untrustworthy.” “There are few real uses,” it said, other than “summarizing notes of meetings, generating reports and helping with computer coding”. It added that it was “skeptical” that Big Tech companies would keep buying the chipmaker’s graphics processing units in such high volumes.

Locking billions of U.S. dollars into AI-focused data centers with no clear exit strategy in case the AI craze ends means that systemic risk is building in finance and the economy. With data center investments driving U.S. economic growth, the American economy has become dependent on a handful of companies that have not yet managed to generate one dollar of profit on the ‘compute’ delivered by these data center investments.

America’s High-Stakes Geopolitical Bet Gone Wrong

The AI boom (bubble) developed with the support of both major political parties in the U.S. The vision of American firms pushing the AI frontier and reaching AGI first is widely shared; in fact, there is a bipartisan consensus on how important it is that the U.S. win the global AI race. America’s industrial capability is critically dependent on a number of potential adversary nation-states, including China. In this context, America’s lead in GenAI is considered to constitute a potentially very powerful geopolitical lever: if America manages to get to AGI first, so the analysis goes, it can build up an overwhelming long-term advantage, especially over China (see Farrell).

That is why Silicon Valley, Wall Street and the Trump administration are doubling down on the “AGI First” strategy. But astute observers highlight the costs and risks of this strategy. Prominently, Eric Schmidt and Selina Xu worry, in the New York Times of August 19, 2025, that “Silicon Valley has grown so enamored with accomplishing this goal [of AGI] that it’s alienating the general public and, worse, bypassing crucial opportunities to use the technology that already exists. In being solely fixated on this objective, our nation risks falling behind China, which is far less concerned with creating A.I. powerful enough to surpass humans and much more focused on using the technology we have now.”

Schmidt and Xu are rightly worried. Perhaps the plight of the U.S. economy is best captured by OpenAI’s Sam Altman, who fantasizes about putting his data centers in space: “Like, maybe we build a big Dyson sphere around the solar system and say, ‘Hey, it actually makes no sense to put these on Earth.’” As long as such ‘hallucinations’ about using solar-collecting satellites to harvest (limitless) star power continue to convince gullible financial investors, the government and users of the “magic” of AI and the AI industry, the U.S. economy is surely doomed.
