Most of us think of the CPU as the "brains" of a computer, but what does that actually mean? What is going on inside with the billions of transistors that make your computer work? In this four-part series, we'll be focusing on computer hardware design, covering the ins and outs of what makes a computer function.

The series will cover computer architecture, processor circuit design, VLSI (very-large-scale integration), chip fabrication, and future trends in computing. If you've always been interested in the details of how processors work on the inside, stick around: this is what you need to know to get started.

What Does a CPU Actually Do?

Let's start at a very high level with what a processor does and how the building blocks come together in a functioning design. This includes processor cores, the memory hierarchy, branch prediction, and more. First, we need a basic definition of what a CPU does.

The simplest explanation is that a CPU follows a set of instructions to perform some operation on a set of inputs. For example, this could be reading a value from memory, adding it to another value, and finally storing the result back in memory at a different location. It could also be something more complex, like dividing two numbers if the result of the previous calculation was greater than zero.

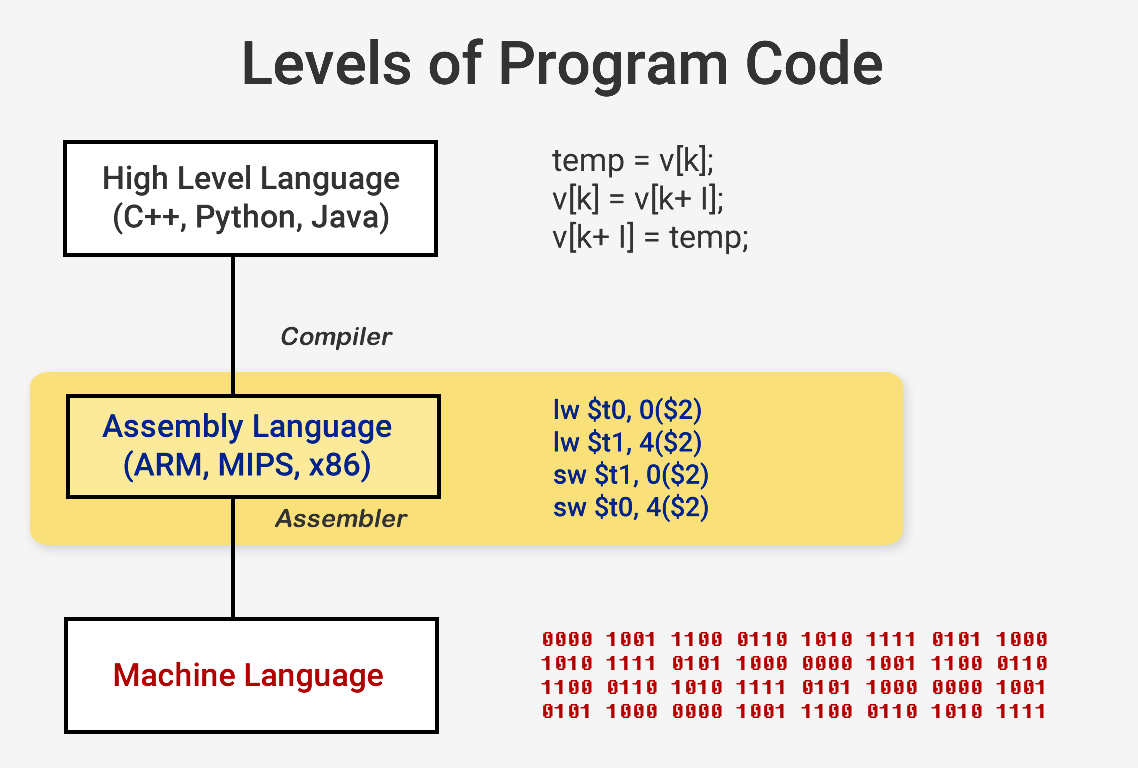

When you want to run a program like an operating system or a game, the program itself is a series of instructions for the CPU to execute. These instructions are loaded from memory, and on a simple processor, they are executed one by one until the program is finished. While software developers write their programs in high-level languages like C++ or Python, the processor can't understand those directly. It only understands 1s and 0s, so we need a way to represent code in this format.

The Basics of CPU Instructions

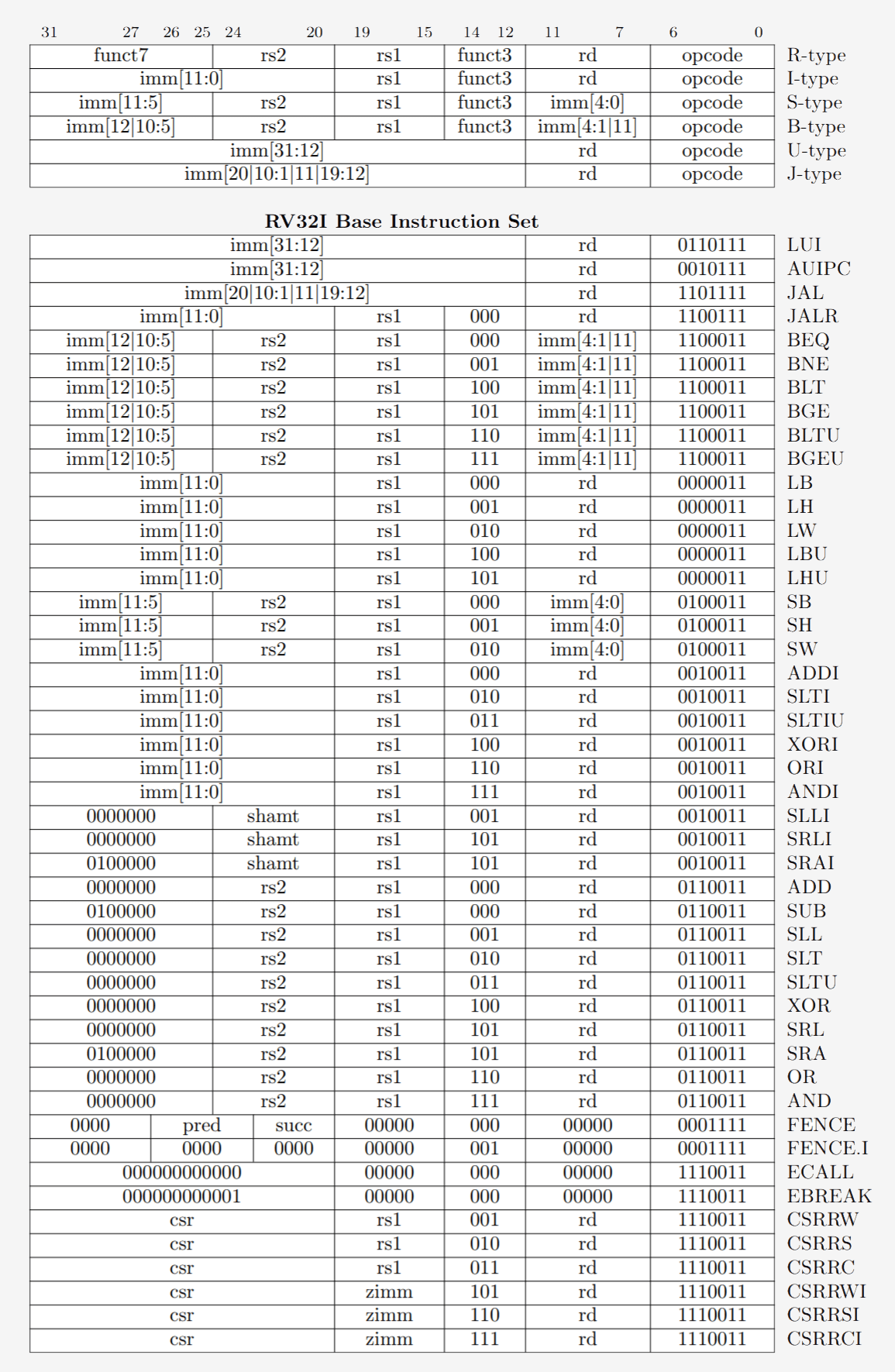

Programs are compiled into low-level instructions, written in human-readable form as assembly language and encoded as binary machine code, that are defined by an Instruction Set Architecture (ISA). The ISA is the set of instructions that the CPU is built to understand and execute. Some of the most common ISAs are x86, MIPS, ARM, RISC-V, and PowerPC. Just as the syntax for writing a function in C++ differs from that of a function doing the same thing in Python, each ISA has its own syntax.

These ISAs can be broken up into two main categories: fixed-length and variable-length. The base RISC-V ISA uses fixed-length 32-bit instructions, which means that a certain predefined set of bits in each instruction determines what type of instruction it is. This is different from x86, which uses variable-length instructions. In x86, instructions can be encoded in different ways and with different numbers of bits for different parts. Because of this complexity, the instruction decoder in x86 CPUs is typically one of the most complex parts of the entire design.
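Every base RISC-V instruction is 32 bits wide, and its fields sit at fixed bit positions, so decoding comes down to shifting and masking. The Python sketch below shows this for an R-type (register-to-register) instruction. The bit positions follow the RISC-V base integer specification, and the function name `decode_rtype` is just an illustrative choice. The example word `0x002081B3` is the encoding of `add x3, x1, x2`.

```python
def decode_rtype(word: int) -> dict:
    """Split a 32-bit RISC-V R-type instruction into its fixed-position fields."""
    return {
        "opcode": word & 0x7F,          # bits 6..0: instruction class (0x33 = integer reg-reg op)
        "rd":     (word >> 7) & 0x1F,   # bits 11..7: destination register
        "funct3": (word >> 12) & 0x7,   # bits 14..12: selects the operation within the class
        "rs1":    (word >> 15) & 0x1F,  # bits 19..15: first source register
        "rs2":    (word >> 20) & 0x1F,  # bits 24..20: second source register
        "funct7": (word >> 25) & 0x7F,  # bits 31..25: further distinguishes e.g. ADD from SUB
    }

fields = decode_rtype(0x002081B3)  # add x3, x1, x2
print(fields)  # {'opcode': 51, 'rd': 3, 'funct3': 0, 'rs1': 1, 'rs2': 2, 'funct7': 0}
```

An x86 decoder cannot work this simply. It must first determine how long each instruction is before it knows where the fields, and the next instruction, begin.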

Fixed-length instructions allow for easier decoding due to their regular structure but limit the total number of instructions an ISA can support. The common variants of the RISC-V architecture have about 100 instructions and are defined by an open standard. In contrast, x86 is proprietary, and nobody really knows how many instructions exist. People generally believe there are a few thousand x86 instructions, but the exact number isn't public. Despite differences among the ISAs, they all carry essentially the same core functionality.

Now we're ready to turn our computer on and start running things. Executing an instruction actually involves several basic steps, which are spread across the many stages of a processor.

Fetch, Decode, Execute: The CPU Execution Cycle

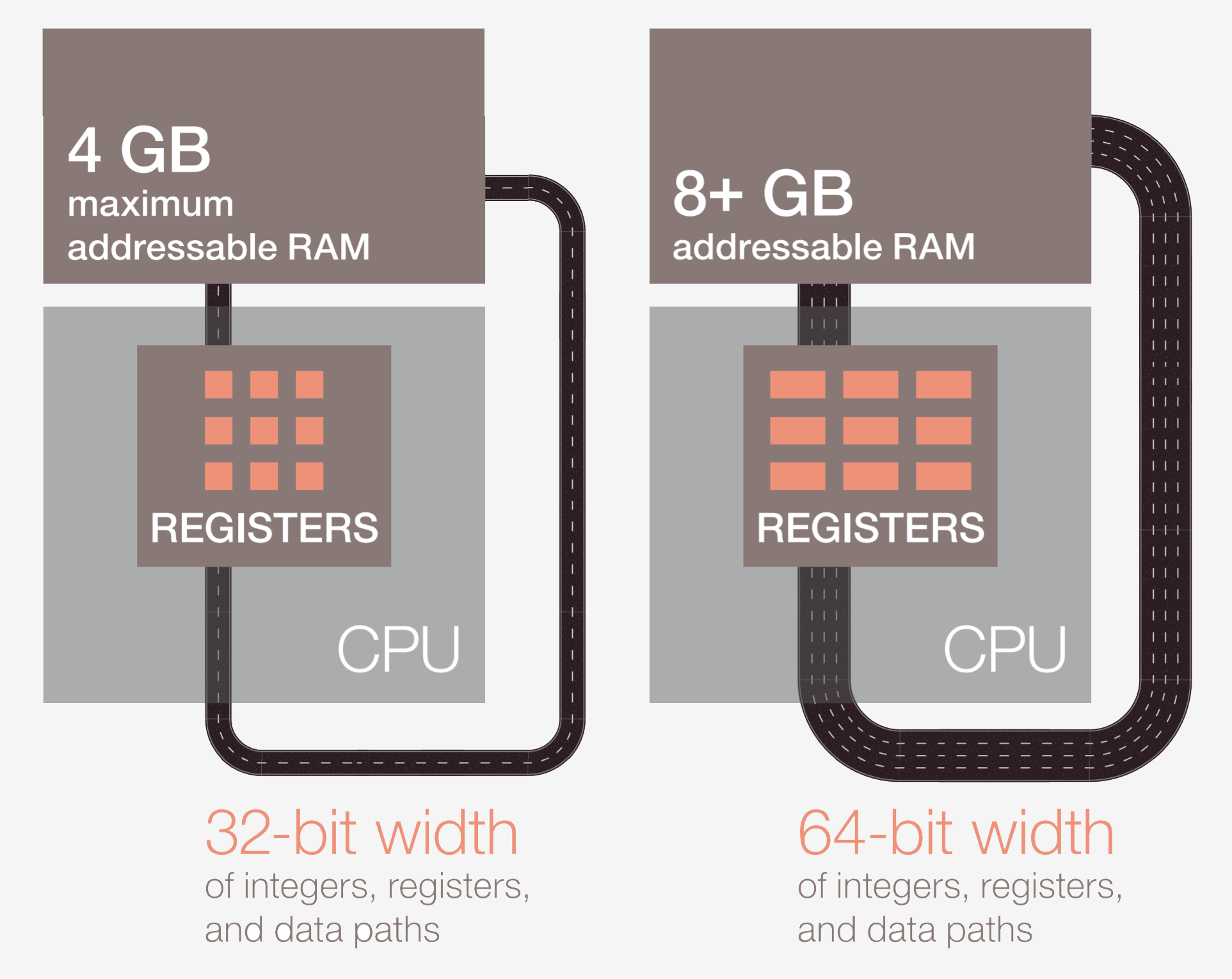

The first step is to fetch the instruction from memory into the CPU to begin execution. In the second step, the instruction is decoded so the CPU can figure out what type of instruction it is. There are many types, including arithmetic instructions, branch instructions, and memory instructions. Once the CPU knows what type of instruction it is executing, it collects the operands for the instruction from memory or from internal registers in the CPU. If you want to add number A to number B, you can't do the addition until you actually know the values of A and B. Most modern processors are 64-bit, which means that their general-purpose registers, and the integer values they hold, are 64 bits wide.

After the CPU has the operands for the instruction, it moves to the execute stage, where the operation is performed on the inputs. This could be adding the numbers, performing a logical manipulation on them, or just passing them through without modification. After the result is calculated, memory may need to be accessed to store the result, or the CPU could just keep the value in one of its internal registers. After the result is stored, the CPU updates the state of various elements and moves on to the next instruction.
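Here is a minimal Python sketch of a fetch, decode, execute loop that makes the cycle concrete. The instruction set is entirely hypothetical: the opcodes `load`, `add`, `div`, `store`, and `blez` and the tuple format are invented for illustration, loosely in the style of RISC mnemonics. Real hardware performs these steps with circuits, not an `if` chain. The sample program mirrors the earlier examples. It reads two values from memory, adds them, stores the result at a different location, and divides only if that result is greater than zero.

```python
def run(program, memory, num_regs=4):
    """Interpret a hypothetical toy ISA until the program counter runs off the end."""
    regs = [0] * num_regs
    pc = 0                                    # program counter: index of the next instruction
    while pc < len(program):
        op, *operands = program[pc]           # fetch the instruction, then decode it
        next_pc = pc + 1                      # default: fall through to the next instruction
        if op == "load":                      # read an operand from memory into a register
            rd, addr = operands
            regs[rd] = memory[addr]
        elif op == "add":                     # execute: operands come from registers
            rd, rs1, rs2 = operands
            regs[rd] = regs[rs1] + regs[rs2]
        elif op == "div":
            rd, rs1, rs2 = operands
            regs[rd] = regs[rs1] // regs[rs2]
        elif op == "store":                   # write a result back to memory
            rs, addr = operands
            memory[addr] = regs[rs]
        elif op == "blez":                    # branch: change pc if regs[rs] <= 0
            rs, target = operands
            if regs[rs] <= 0:
                next_pc = target
        else:
            raise ValueError(f"unknown opcode {op!r}")
        pc = next_pc                          # update state and move on
    return regs

memory = [7, 5, 0, 0]
program = [
    ("load", 0, 0),        # r0 = memory[0]
    ("load", 1, 1),        # r1 = memory[1]
    ("add", 2, 0, 1),      # r2 = r0 + r1
    ("store", 2, 2),       # memory[2] = r2
    ("blez", 2, 7),        # if r2 <= 0, jump past the divide (index 7 = end)
    ("div", 3, 2, 1),      # r3 = r2 // r1
    ("store", 3, 3),       # memory[3] = r3
]
regs = run(program, memory)
print(memory)  # [7, 5, 12, 2]
```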

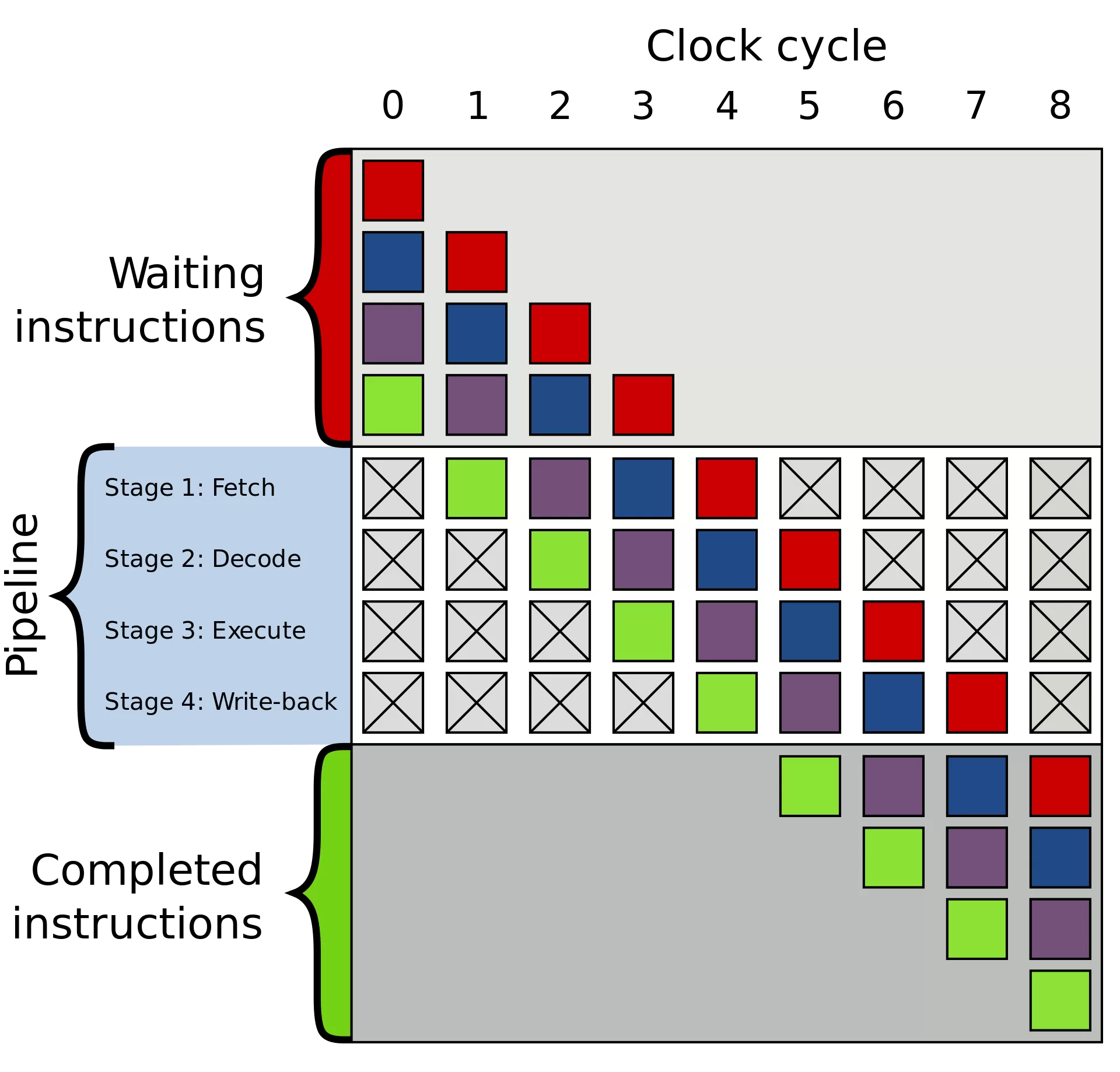

This description is, of course, a huge simplification. Most modern processors break these few stages up into 20 or more smaller stages to improve efficiency. As a result, the processor starts and finishes several instructions each cycle, yet it may take 20 or more cycles for any one instruction to complete from start to finish. This model is typically called a pipeline. Just as it takes a while to fill a pipe and for liquid to flow all the way through it, a processor pipeline takes time to fill, but once it is full, you get a constant output.
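Some simple arithmetic shows the benefit of pipelining. The sketch below assumes an idealized single-issue pipeline in which every stage takes one cycle and nothing ever stalls. Without pipelining, each instruction occupies every stage before the next one starts. With pipelining, the first instruction needs one cycle per stage to fill the pipe, and after that one more instruction finishes every cycle.

```python
def unpipelined_cycles(n_instructions: int, n_stages: int) -> int:
    # Each instruction runs through every stage before the next one starts.
    return n_instructions * n_stages

def pipelined_cycles(n_instructions: int, n_stages: int) -> int:
    # The first instruction needs n_stages cycles to fill the pipeline;
    # after that, one instruction completes every cycle (ideal case, no stalls).
    return n_stages + (n_instructions - 1)

print(unpipelined_cycles(1000, 20))  # 20000
print(pipelined_cycles(1000, 20))    # 1019
```

Each instruction still needs 20 cycles from start to finish. The pipeline improves throughput, not the latency of any single instruction.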

Out-of-Order Execution and Superscalar Architecture

The entire cycle that an instruction goes through is a tightly choreographed process, but not all instructions finish in the same amount of time. For example, addition is very fast, while division can take tens of cycles and loading from memory may take hundreds. Rather than stalling the entire processor while one slow instruction finishes, most modern processors execute out-of-order.

That means they determine which instruction would be the most beneficial to execute at a given time and buffer other instructions that aren't ready. If the current instruction isn't ready yet, the processor may jump ahead in the code to see if anything else is ready.
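A toy model helps show why this pays off. The Python sketch below is a deliberately simplified scheduler, not how any real core is built. It issues at most one instruction per cycle and assumes registers have already been renamed, so every value has a unique name. The latencies are made up. With in-order issue, the independent divide waits behind the stalled load. With out-of-order issue, the divide runs while the load is still outstanding.

```python
from dataclasses import dataclass

@dataclass
class Instr:
    name: str
    dest: str        # each value gets a unique name, as if registers were already renamed
    srcs: tuple
    latency: int     # hypothetical cycles until the result is available

def simulate(program, out_of_order):
    """Issue at most one instruction per cycle; return the cycle the last result is ready."""
    pending = list(program)
    ready_at = {}                         # value name -> cycle it becomes available
    cycle = finish = 0
    while pending:
        still_coming = {i.dest for i in pending}
        # In-order hardware may only look at the oldest instruction;
        # out-of-order hardware may pick any waiting instruction.
        window = range(len(pending)) if out_of_order else range(1)
        for idx in window:
            instr = pending[idx]
            if all(s not in still_coming and ready_at.get(s, 0) <= cycle
                   for s in instr.srcs):
                pending.pop(idx)
                ready_at[instr.dest] = cycle + instr.latency
                finish = max(finish, ready_at[instr.dest])
                break                     # single issue: one instruction per cycle
        cycle += 1
    return finish

program = [
    Instr("load a", "a", (), 100),        # slow load that misses in the caches
    Instr("add b", "b", ("a",), 1),       # must wait for the load
    Instr("div c", "c", ("x",), 20),      # independent, but slow
    Instr("add d", "d", ("c", "y"), 1),   # depends only on the divide
]
print(simulate(program, out_of_order=False))  # 122
print(simulate(program, out_of_order=True))   # 101
```

The out-of-order run finishes earlier because the divide and its dependent add overlap with the 100-cycle load. In the in-order run, they can only start after the load completes.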

In addition to out-of-order execution, typical modern processors employ what is called a superscalar architecture. This means that at any one time, the processor is executing many instructions at once in each stage of the pipeline. It may also be waiting on hundreds more to begin their execution. To execute many instructions at once, processors have several copies of each pipeline stage inside.

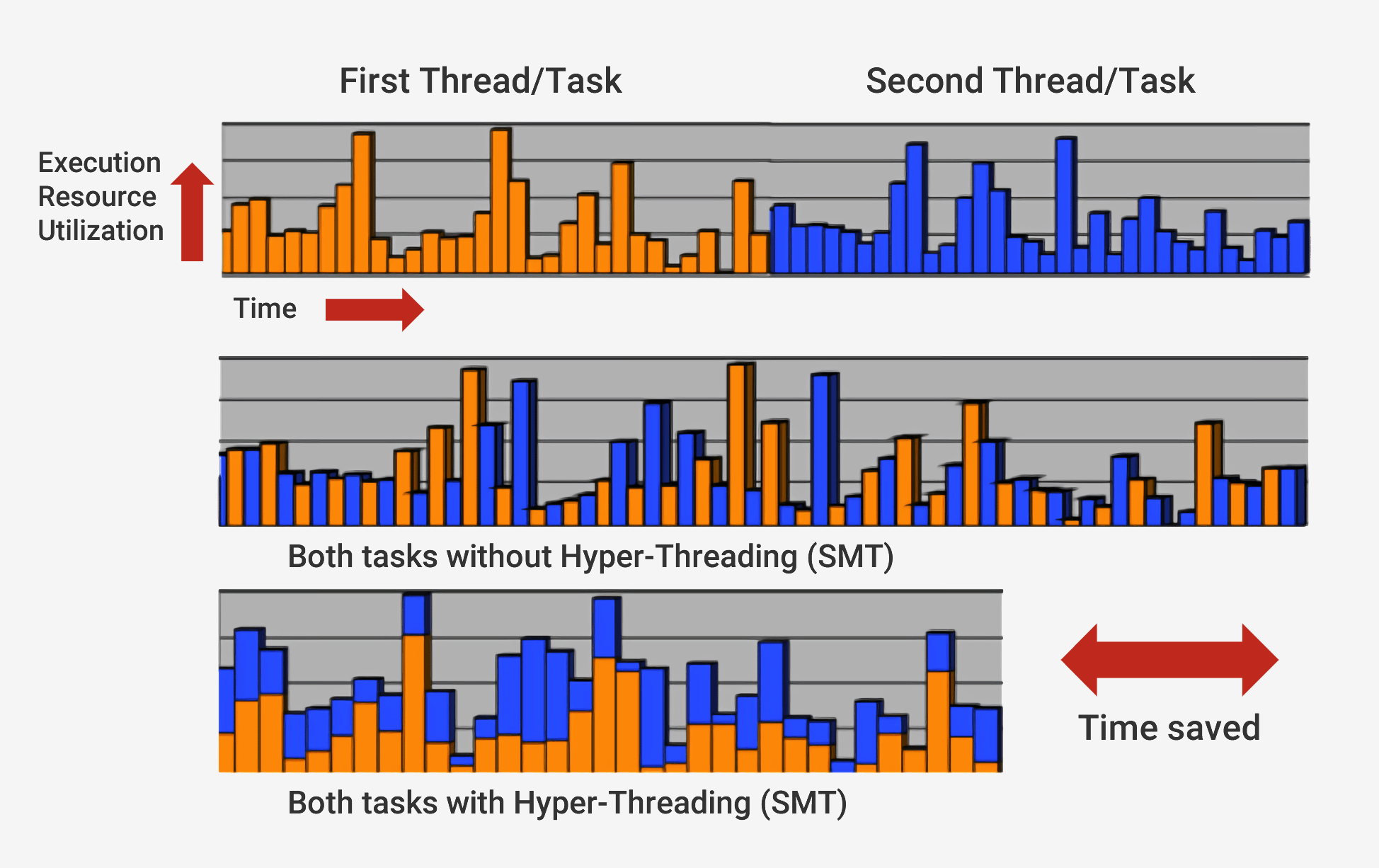

If a processor sees that two instructions are ready to be executed and there is no dependency between them, it will execute them both at the same time rather than waiting for them to finish one after the other. A related technique is Simultaneous Multithreading (SMT), which Intel brands as Hyper-Threading. SMT lets a single core fill these parallel execution slots with instructions from two or more independent threads. Intel and AMD processors usually support two-way SMT, while IBM has developed chips that support up to eight-way SMT.
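The dependency check a superscalar core makes can be sketched as a greedy packing problem. This Python example is an idealized in-order, two-wide model: every instruction takes one cycle, and no two instructions write the same register. Real out-of-order cores search a much larger window of instructions.

```python
def issue_groups(program, width=2):
    """Pack instructions, in program order, into cycles of at most `width`.

    An instruction starts a new cycle if the current cycle is full or if it
    reads a register written by an instruction already in the current cycle.
    Assumes every instruction finishes in one cycle and no two instructions
    write the same register.
    """
    groups, current, written = [], [], set()
    for name, dest, srcs in program:
        if len(current) == width or any(s in written for s in srcs):
            groups.append(current)
            current, written = [], set()
        current.append(name)
        written.add(dest)
    if current:
        groups.append(current)
    return groups

program = [
    ("i1", "r1", ("r2", "r3")),   # r1 = r2 + r3
    ("i2", "r4", ("r5", "r6")),   # r4 = r5 + r6  (independent of i1)
    ("i3", "r7", ("r1", "r4")),   # r7 = r1 + r4  (needs i1 and i2)
    ("i4", "r8", ("r2", "r5")),   # r8 = r2 + r5  (independent of i3)
    ("i5", "r9", ("r7", "r8")),   # r9 = r7 + r8  (needs i3 and i4)
]
print(issue_groups(program))  # [['i1', 'i2'], ['i3', 'i4'], ['i5']]
```

The five instructions finish in three cycles instead of five, because i1/i2 and i3/i4 are independent pairs.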

To accomplish this carefully choreographed execution, a processor has many extra elements in addition to the basic core. A processor contains hundreds of individual modules, each with a specific purpose, but we'll just go over the basics. The two biggest and most beneficial are the caches and the branch predictor. Additional structures that we won't cover include reorder buffers, register alias tables, and reservation stations.

Caches: Speeding Up Memory Access

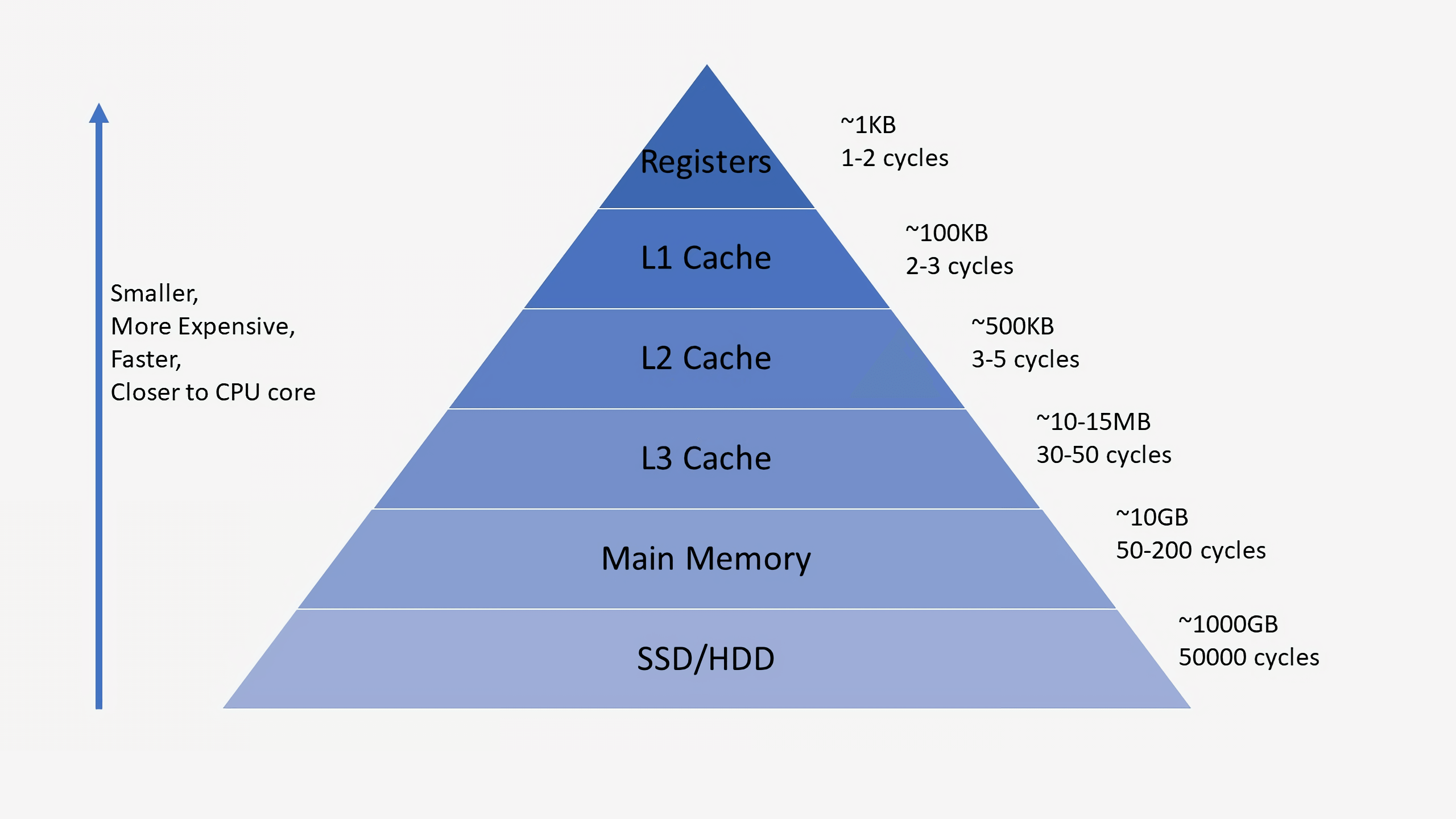

The purpose of caches can be confusing, since they store data just like RAM or an SSD. What sets caches apart, though, is their access latency and speed. Even though RAM is extremely fast, it is orders of magnitude too slow for a CPU. It may take hundreds of cycles for RAM to respond with data, and the processor would be stuck with nothing to do. If the data isn't in RAM, it can take tens of thousands of cycles or more to access it on an SSD. Without caches, our processors would grind to a halt.

Processors typically have three levels of cache that form what is known as a memory hierarchy. The L1 cache is the smallest and fastest, the L2 is in the middle, and the L3 is the largest and slowest of the caches. Above the caches in the hierarchy are small registers that each store a single data value during computation. These registers are the fastest storage in your system. When a compiler transforms a high-level program into assembly language, it determines the best way to use these registers.

When the CPU requests data from memory, it first checks whether that data is already stored in the L1 cache. If it is, the data can be accessed in just a few cycles. If it isn't present, the CPU checks the L2 and then the L3 cache. The caches are implemented so that they are generally transparent to the core. The core just asks for data at a specified memory address, and whichever level of the hierarchy holds it responds. As we move down the memory hierarchy, both size and latency grow substantially, with size often increasing by an order of magnitude or more at each level. Only if the CPU can't find the data in any of the caches will it go to main memory (RAM).
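The lookup order described above can be sketched in a few lines of Python. The latencies are assumed round numbers for illustration, not measurements of any particular chip. The sketch also makes three simplifications:

- addresses are treated as whole cache lines;
- the levels are searched one after another;
- a miss simply copies the data into every level.

The caller only supplies an address and never chooses a level, which mirrors how the hierarchy is transparent to the core.

```python
# Hypothetical latencies in cycles; real values vary by chip and generation.
LEVELS = [("L1", 4), ("L2", 14), ("L3", 50)]
RAM_LATENCY = 300

def access(address, caches):
    """Search L1, then L2, then L3, then RAM. Return (where it was found, cycles spent).

    `caches` maps a level name to the set of addresses it currently holds.
    After the lookup, the address is copied into every cache level so that
    the next access hits in L1 (a simplification of real fill policies).
    """
    cycles = 0
    for name, latency in LEVELS:
        cycles += latency
        if address in caches[name]:
            found = name
            break
    else:
        cycles += RAM_LATENCY          # missed in every cache: go to main memory
        found = "RAM"
    for name, _ in LEVELS:
        caches[name].add(address)
    return found, cycles

caches = {"L1": set(), "L2": set(), "L3": {0x40}}
print(access(0x40, caches))   # ('L3', 68)
print(access(0x40, caches))   # ('L1', 4)
print(access(0x80, caches))   # ('RAM', 368)
```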

On a typical processor, each core has two L1 caches: one for data and one for instructions. The L1 caches are typically around 100 kilobytes in total, and their size varies by chip and generation. There is also typically an L2 cache for each core, although it may be shared between two cores in some architectures. L2 caches range from a few hundred kilobytes to a few megabytes per core. Finally, there is an L3 cache that is typically shared among the cores and is on the order of tens of megabytes.

When a processor is executing code, the instructions and data values that it uses most often get cached. This significantly speeds up execution, since the processor does not have to constantly go to main memory for the data it needs. We will talk more about how these memory systems are actually implemented in the second and third installments of this series.
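Caching frequently used data works well because programs tend to reuse the same data (temporal locality) and touch neighboring addresses (spatial locality). The sketch below simulates a tiny direct-mapped cache, in which each memory line can live in exactly one slot. The 64-byte line size is common on current x86 chips. The 64-slot (4 KB) capacity is an assumption chosen to keep the example small.

```python
def hit_rate(addresses, num_lines=64, line_size=64):
    """Simulate a small direct-mapped cache and return the fraction of hits.

    Each cache slot remembers the tag of the single memory line it holds.
    """
    slots = [None] * num_lines
    hits = 0
    for addr in addresses:
        line = addr // line_size          # which 64-byte memory line holds this byte
        index = line % num_lines          # the one slot that line may occupy
        tag = line // num_lines           # distinguishes lines that share a slot
        if slots[index] == tag:
            hits += 1
        else:
            slots[index] = tag            # miss: fetch the line, evicting the old one
    return hits / len(addresses)

# Walking through 4-byte integers one after another: each 64-byte line
# holds 16 of them, so only the first access to each line misses.
sequential = [4 * i for i in range(1024)]
print(hit_rate(sequential))              # 0.9375

# Re-reading a small working set that fits in the cache: after the first
# pass, every access hits.
small_loop = [4 * i for i in range(256)] * 10
print(hit_rate(small_loop))              # 0.99375
```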

Also of note, the three-level cache hierarchy (L1, L2, L3) remains standard, but some modern CPUs (such as AMD's Ryzen chips with 3D V-Cache) have begun stacking an additional cache die onto the processor to enlarge the L3. This extra cache tends to boost performance in certain scenarios.

Branch Prediction and Speculative Execution

Besides caches, another key building block of a modern processor is an accurate branch predictor. Branch instructions are similar to "if" statements for a processor. One set of instructions executes if the condition is true, and another executes if the condition is false. For example, you may want to compare two numbers, execute one function if they are equal, and execute another function if they are different. These branch instructions are extremely common and can make up roughly 20% of all instructions in a program.

On the surface, these branch instructions may not seem like an issue, but they can actually be very challenging for a processor to get right. At any one time, the CPU may be in the process of executing ten or twenty instructions, so it is very important to know which instructions to execute. It may take 5 cycles to determine whether the current instruction is a branch and another 10 cycles to determine whether the condition is true. In that time, the processor may have started executing dozens of additional instructions without even knowing whether those were the correct ones.

To get around this issue, all modern high-performance processors employ a technique called speculation. The processor keeps track of branch instructions and predicts whether each branch will be taken or not. If the prediction is correct, the processor has already started executing the subsequent instructions, resulting in a performance gain. If the prediction is incorrect, the processor stops execution, discards all incorrectly executed instructions, and restarts from the correct point.
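A little arithmetic shows why prediction accuracy matters so much. This sketch makes three assumptions:

- a simple pipeline that would otherwise complete one instruction per cycle;
- the rough figures from this section: about 20% branches and a penalty of about 15 cycles, taken from the 5 + 10 cycle example above;
- no other sources of stalls.

Under those assumptions, stalling on every branch gives about 4.0 cycles per instruction. A 90% accurate predictor gives about 1.3, and a 98% accurate one about 1.06.

```python
def effective_cpi(base_cpi, branch_fraction, mispredict_rate, penalty_cycles):
    """Average cycles per instruction when mispredicted branches add a fixed penalty."""
    # Every mispredicted branch throws away work and costs `penalty_cycles` extra cycles.
    return base_cpi + branch_fraction * mispredict_rate * penalty_cycles

print(effective_cpi(1.0, 0.20, 1.00, 15))   # no prediction: every branch pays, about 4.0
print(effective_cpi(1.0, 0.20, 0.10, 15))   # 90% accurate predictor, about 1.3
print(effective_cpi(1.0, 0.20, 0.02, 15))   # 98% accurate predictor, about 1.06
```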

These branch predictors can be seen as an early, simple form of machine learning, since they adapt to branch behavior over time. If a predictor makes too many wrong guesses, it adjusts to improve its accuracy. Decades of research into branch prediction techniques have led to accuracies exceeding 90% in modern processors.
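One classic textbook scheme is a table of 2-bit saturating counters. It is shown here as a Python sketch, not as what any particular Intel or AMD chip uses. Each branch address maps to a counter that moves one step toward "taken" or "not taken" after every outcome. The predictor therefore learns a branch's usual direction and is not thrown off by a single exception. The table size and the branch address 0x400 are arbitrary assumptions.

```python
class TwoBitPredictor:
    """A table of 2-bit saturating counters indexed by branch address.

    Counter values 0-1 predict "not taken", 2-3 predict "taken". Each outcome
    nudges the counter one step, so a single surprise does not flip a
    strongly biased prediction.
    """

    def __init__(self, table_size=1024):
        self.table_size = table_size
        self.counters = [1] * table_size          # start weakly not-taken

    def _index(self, pc):
        return pc % self.table_size

    def predict(self, pc):
        return self.counters[self._index(pc)] >= 2

    def update(self, pc, taken):
        i = self._index(pc)
        if taken:
            self.counters[i] = min(3, self.counters[i] + 1)
        else:
            self.counters[i] = max(0, self.counters[i] - 1)


def accuracy(predictor, trace):
    """Fraction of branches in `trace` (a list of (pc, taken) pairs) predicted correctly."""
    correct = 0
    for pc, taken in trace:
        correct += predictor.predict(pc) == taken
        predictor.update(pc, taken)           # learn from the real outcome
    return correct / len(trace)

# A loop branch at a hypothetical address 0x400: taken 9 times, then falls
# through once, repeated 100 times (a 10-iteration loop run 100 times).
trace = ([(0x400, True)] * 9 + [(0x400, False)]) * 100
print(accuracy(TwoBitPredictor(), trace))     # 0.899
```

On this loop, the predictor is right 899 times out of 1,000, and nearly all of its misses come at the final iteration of each loop. Modern predictors also track the history of recent branches, which lets them learn patterns such as "taken nine times, then not taken" and push accuracy higher.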

Speculation significantly improves performance by letting the processor execute ready instructions instead of waiting on stalled ones, but it also introduces security vulnerabilities. The now-infamous Spectre attack exploits speculative execution and branch prediction. Attackers use specially crafted code to trick the processor into speculatively executing instructions that access sensitive data. The traces those instructions leave behind, for example in the cache, can then be measured to leak that data. As a result, some aspects of speculation had to be redesigned to prevent data leaks, leading to a slight drop in performance.

The architecture of modern processors has advanced dramatically over the past few decades. Innovation and clever design have delivered more performance and better utilization of the underlying hardware. However, CPU manufacturers are highly secretive about the specific technologies inside their processors, so it's impossible to know exactly what goes on inside. That said, the fundamental principles of how processors work remain consistent across all designs. Intel may add its secret sauce to boost cache hit rates, or AMD may add an advanced branch predictor, but both accomplish the same task.

This overview, the first part of the series, covers most of the basics of how processors work. In the second part, we'll discuss how the components that go into a CPU are designed, covering logic gates, clocking, power management, circuit schematics, and more.